One of the most difficult and time-consuming tasks for IT administrators is migrating file shares and their permissions. Before embarking on the migration, some procedures need to be followed to avoid mishaps like broken file systems or lost files.

The most common form of data migration carries over all files together with their permissions. Microsoft provides a built-in tool, Windows Server Migration Tools, along with PowerShell cmdlets for the job. The tool eases the migration process by moving several roles, features, and operating system settings to a new server.

Depending on the circumstances prompting the migration, we need to answer questions like:

1. Are we preserving the existing domain?

2. What are the settings of the old server?

3. Was the server running on a virtual machine?

4. Was the virtual machine on a different platform from the one we are moving files into?

Regardless of the reason behind the migration, different methods can be used to initiate the migration. If the existing server system has some pending issues, you are advised to sort them out before starting the migration process.

Using the Windows Server Migration Tool

We need to install the migration tool to ease the migration process. The Windows Server Migration Tools transfer server roles, features, and some operating system settings to the destination server.

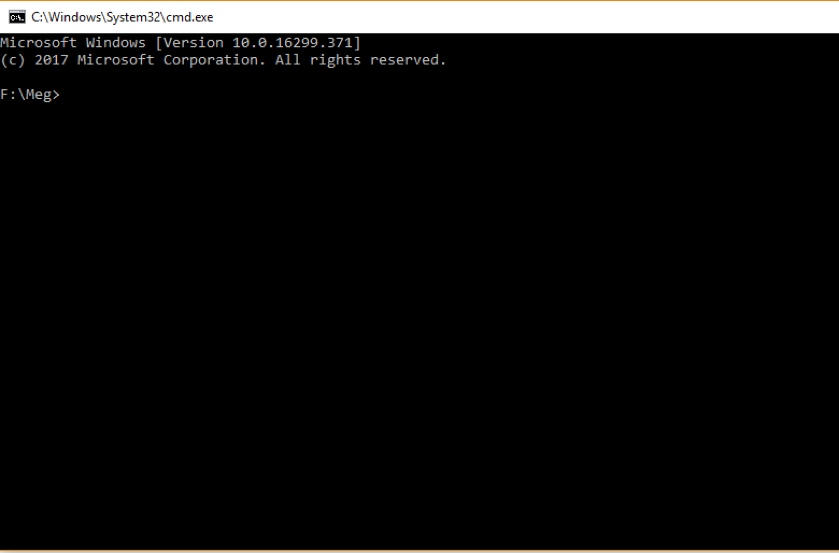

1. To get started, install the migration tool from the PowerShell console using the following command (the -ComputerName parameter lets you run it against the destination server remotely):

Install-WindowsFeature Migration -ComputerName <DestinationServer>

2. On the destination server, create a deployment folder for the source server using SmigDeploy.exe, which the command above installs along with the tools. The /architecture and /os values must match the source server; the following command targets a 64-bit Windows Server 2008 R2 source:

C:\Windows\System32\ServerMigrationTools\SmigDeploy.exe /package /architecture amd64 /os WS08R2 /path <deployment folder path>

3. Copy the deployment folder from the destination server to the old (source) server.

4. Use the Remote Desktop Protocol (RDP) to connect to the old server and run SmigDeploy.exe from the copied folder, usually found at the following path:

C:\<DeploymentFolder>\SMT_<OS>_<Architecture>

5. Next, load the migration cmdlets by running the following command in an elevated PowerShell console on each server, so that the destination server can accept the migration data and the source server can send it:

Add-PsSnapin microsoft.windows.servermanager.migration

Add-PSSnapin loads the Windows Server Migration Tools cmdlets, such as Receive-SmigServerData and Send-SmigServerData, into the current session.

6. On the destination server, run Receive-SmigServerData and enter a password when prompted. The destination server then waits up to five minutes for the source server to connect; if nothing connects in that time, run the command again.

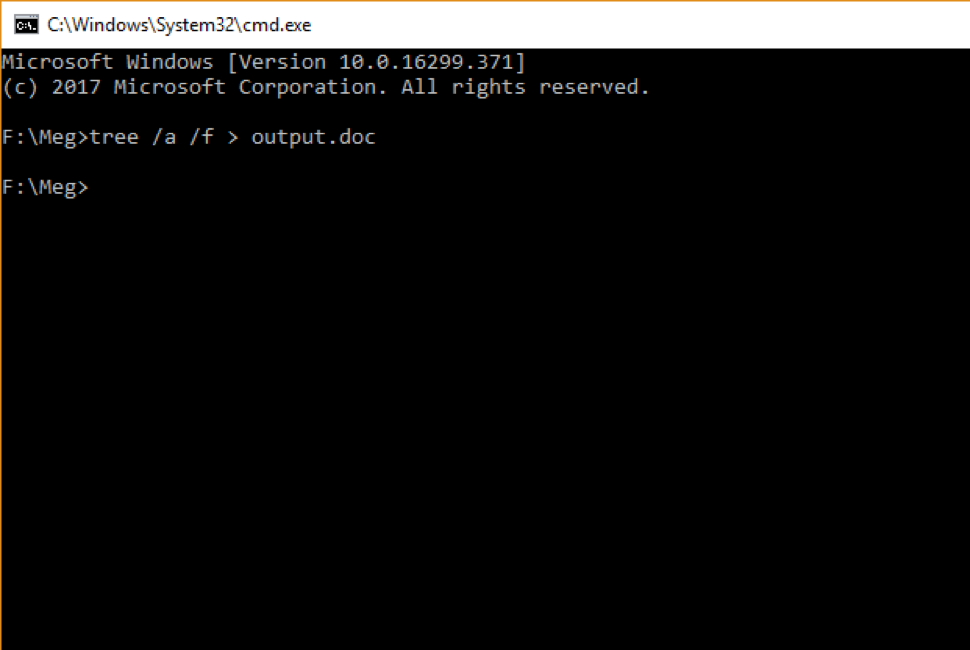
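Steps 1, 2, 5, and 6 all run on the destination server, so they can be collected into one script. The sketch below is a minimal example under stated assumptions: the function names Initialize-MigrationDestination and Start-MigrationReceive are hypothetical, the WS08R2/amd64 defaults simply mirror the example command above, and cmdlets such as Get-WindowsFeature and Add-PSSnapin are only available in Windows PowerShell on Windows Server.

```powershell
# Minimal sketch for the destination server; run in an elevated Windows PowerShell session.
# Function names are hypothetical; the defaults mirror the WS08R2/amd64 example above.
function Initialize-MigrationDestination {
    param(
        [Parameter(Mandatory)][string]$DeploymentFolder,
        [string]$SourceOS = 'WS08R2',
        [string]$Architecture = 'amd64'
    )
    # Step 1: install Windows Server Migration Tools only if the feature is missing.
    if (-not (Get-WindowsFeature -Name Migration).Installed) {
        Install-WindowsFeature -Name Migration | Out-Null
    }
    # Step 2: build a deployment folder that matches the source server's OS and architecture.
    $smigDeploy = Join-Path -Path $env:windir -ChildPath 'System32\ServerMigrationTools\SmigDeploy.exe'
    & $smigDeploy /package /architecture $Architecture /os $SourceOS /path $DeploymentFolder
}

function Start-MigrationReceive {
    # Step 5: load the migration cmdlets unless this session already has them.
    if (-not (Get-PSSnapin -Name Microsoft.Windows.ServerManager.Migration -ErrorAction SilentlyContinue)) {
        Add-PSSnapin -Name Microsoft.Windows.ServerManager.Migration
    }
    # Step 6: prompt for a password, then wait for Send-SmigServerData from the source server.
    Receive-SmigServerData
}
```

For example, run Initialize-MigrationDestination -DeploymentFolder D:\SmigDeploy (a placeholder folder), copy the result to the source server and register it there as in steps 3 and 4, then call Start-MigrationReceive right before sending data.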

Sending Data to the Destination Server

1. On the source server, run Send-SmigServerData in the PowerShell console. -SourcePath is the folder holding the data to migrate on the source server, and -DestinationPath is the folder that should receive it on the destination server:

Send-SmigServerData -ComputerName <DestinationServer> -SourcePath <SourceDataPath> -DestinationPath <DestinationDataPath> -Include All -Recurse

2. When prompted for a password, enter the same password you entered when running Receive-SmigServerData on the destination server.

3. When the command completes, the files, their permissions, and the shares (included by -Include All) should be on the destination server.

TIP: Confirm that all shares were transferred by running Get-SmbShare in PowerShell on the destination server.
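The Get-SmbShare check in the tip can be run against both servers and the results compared. This is a hedged sketch: Get-ShareNames and Compare-ShareNames are hypothetical helper names, and the sketch assumes CIM sessions (WinRM) can be opened to both servers.

```powershell
# Hypothetical helpers: compare the share lists of the old and new servers.
function Get-ShareNames {
    # Lists shares on a remote server over CIM, skipping special shares such as C$ and ADMIN$.
    param([Parameter(Mandatory)][string]$ComputerName)
    $session = New-CimSession -ComputerName $ComputerName
    try {
        Get-SmbShare -CimSession $session -Special $false | Select-Object -ExpandProperty Name
    }
    finally {
        Remove-CimSession -CimSession $session
    }
}

function Compare-ShareNames {
    # Returns the source shares missing on the destination; -notin compares case-insensitively.
    param([string[]]$SourceShares, [string[]]$DestinationShares)
    $SourceShares | Where-Object { $_ -notin $DestinationShares } | Sort-Object
}
```

For example, Compare-ShareNames -SourceShares (Get-ShareNames -ComputerName OLDFS01) -DestinationShares (Get-ShareNames -ComputerName NEWFS01), where OLDFS01 and NEWFS01 are placeholder server names. No output means every non-special share on the old server also exists on the new one.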

Alternatives to Windows Server Migration Tools

One alternative is to take the most recent backup and restore it on the new server. A restore brings back the data rather than the whole file system, and the file permissions on the new server will be the same as they were on the old server. This is generally a fast approach, although its speed depends on how much data has to be restored.

1. Using the Free Disk2VHD Tool

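If the current server is not virtualized, Microsoft's free Disk2vhd utility (part of Sysinternals) is a reliable and fast option. It converts physical disks into virtual hard disk files, and because it uses Volume Shadow Copy to take a point-in-time snapshot, it can run while the server is online.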

All NTFS permissions are retained and transferred to the new drive. The advantage of using this tool is the automatic creation of a fully compatible Hyper-V virtual drive.
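Since NTFS permissions are expected to survive the move, it is worth spot-checking a few folders afterwards, for example on a mounted copy of the virtual disk or on the new server. A minimal sketch with hypothetical function names: it compares access rules only (not owners), and accounts that were local to the old server may show up as differences because they do not exist on the new one.

```powershell
# Hypothetical helpers for spot-checking that NTFS access rules survived a migration.
function ConvertTo-AceKey {
    # Reduces one access rule to a comparable "identity|rights|allow-or-deny" string.
    param([Parameter(Mandatory)]$Rule)
    '{0}|{1}|{2}' -f $Rule.IdentityReference, $Rule.FileSystemRights, $Rule.AccessControlType
}

function Compare-FolderAcl {
    # Outputs nothing when both folders carry the same set of access rules.
    param(
        [Parameter(Mandatory)][string]$ReferencePath,
        [Parameter(Mandatory)][string]$DifferencePath
    )
    $reference  = @((Get-Acl -Path $ReferencePath).Access  | ForEach-Object { ConvertTo-AceKey -Rule $_ })
    $difference = @((Get-Acl -Path $DifferencePath).Access | ForEach-Object { ConvertTo-AceKey -Rule $_ })
    Compare-Object -ReferenceObject $reference -DifferenceObject $difference
}
```

For example, Compare-FolderAcl -ReferencePath \\OLDFS01\Finance -DifferencePath \\NEWFS01\Finance, using placeholder server and share names. Each line of output is a rule found on only one side.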

2. Copy Utilities

Windows includes several built-in copy utilities that transfer files along with their permissions. The ones most commonly used for server migrations are XCOPY and ROBOCOPY.

Using XCOPY

The typical command should look like this:

XCOPY "\\sourceServer\ShareName\*.*" "\\destServer\ShareName\" /E /C /H /X /K /I /V /Y >Copy.log 2>CopyErr.Log

The parameters used by the command are:

/E – Copies directories and subdirectories, including empty ones.

/C – Continues copying even if errors occur.

/H – Copies hidden and system files.

/X – Copies file audit settings (implies /O, which copies file ownership and ACL information).

/K – Copies attributes; without this switch, XCOPY resets read-only attributes.

/I – If the destination does not exist and more than one file is being copied, assumes the destination is a directory and creates it.

/V – Verifies the size of each new file.

/Y – Suppresses the prompt to confirm overwriting an existing destination file.

The >Copy.log redirection writes the command's output to Copy.log, and 2>CopyErr.Log writes any error messages to CopyErr.Log.
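Because /C tells XCOPY to keep going past errors, it is worth checking both the exit code and CopyErr.Log once the command finishes. A minimal sketch with a hypothetical function name, Test-XcopyRun; the exit-code meanings are the ones Microsoft documents for XCOPY.

```powershell
# Hypothetical helper: summarize an XCOPY run from its exit code and its redirected error log.
function Test-XcopyRun {
    param(
        [Parameter(Mandatory)][int]$ExitCode,
        [Parameter(Mandatory)][string]$ErrorLogPath
    )
    # Exit codes as documented for XCOPY.
    $meaning = switch ($ExitCode) {
        0 { 'Files were copied without error.' }
        1 { 'No files were found to copy.' }
        2 { 'The user pressed CTRL+C to terminate XCOPY.' }
        4 { 'Initialization error (memory, disk space, drive name, or command-line syntax).' }
        5 { 'Disk write error.' }
        default { "Unrecognized exit code $ExitCode." }
    }
    # Any non-blank line in the error log counts as a reported error.
    $errorLines = @(Get-Content -Path $ErrorLogPath | Where-Object { -not [string]::IsNullOrWhiteSpace($_) })
    [pscustomobject]@{
        ExitCode   = $ExitCode
        Meaning    = $meaning
        ErrorLines = $errorLines
        Success    = ($ExitCode -eq 0) -and ($errorLines.Count -eq 0)
    }
}
```

If you run the XCOPY command from PowerShell, Test-XcopyRun -ExitCode $LASTEXITCODE -ErrorLogPath .\CopyErr.Log reports success only when XCOPY returned 0 and the error log is empty.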

Using ROBOCOPY

The Robocopy command looks similar to this:

ROBOCOPY "\\sourceserver\ShareName" "\\destServer\ShareName" /E /COPYALL /R:0 /LOG:Copy.log /V /NP

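The parameters used by the command are:

/E – Copies subdirectories, including empty ones.

/COPYALL – Copies all file information: data, attributes, timestamps, NTFS ACLs, owner, and auditing information (equivalent to /COPY:DATSOU).

/R:0 – Number of retries on failed copies (the default is 1 million). Setting it to 0 disables retries, so a file that fails is logged and skipped instead of holding up the rest of the copy.

/LOG:Copy.log – Writes the status output to Copy.log, overwriting any existing file of that name.

/V – Produces verbose output, including skipped files.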

/NP – No Progress – Copy without displaying the percentage of files copied.
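ROBOCOPY reports its result as a bit field in the exit code: values 0 through 7 mean success, and 8 or higher means at least one file or directory could not be copied. With /R:0, failed files are skipped rather than retried, so checking that code is a quick way to spot them. A minimal sketch with a hypothetical function name, Get-RobocopyResult:

```powershell
# Hypothetical helper: decode ROBOCOPY's bit-field exit code into readable messages.
function Get-RobocopyResult {
    param([Parameter(Mandatory)][int]$ExitCode)
    $details = [System.Collections.Generic.List[string]]::new()
    if ($ExitCode -eq 0) { $details.Add('No files were copied; the destination was already up to date.') }
    if ($ExitCode -band 1) { $details.Add('One or more files were copied successfully.') }
    if ($ExitCode -band 2) { $details.Add('Extra files or directories exist only in the destination.') }
    if ($ExitCode -band 4) { $details.Add('Mismatched files or directories were detected.') }
    if ($ExitCode -band 8) { $details.Add('Some files or directories could not be copied.') }
    if ($ExitCode -band 16) { $details.Add('Serious error: ROBOCOPY did not copy any files.') }
    [pscustomobject]@{
        ExitCode = $ExitCode
        # Codes 0 through 7 indicate success; 8 and above mean at least one failure.
        Success  = $ExitCode -lt 8
        Details  = $details.ToArray()
    }
}
```

For example, run the ROBOCOPY command from PowerShell and then call Get-RobocopyResult -ExitCode $LASTEXITCODE; when Success is False, Copy.log lists the files that failed.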

3. File Synchronization or Replication

Windows Server includes built-in tools that help system administrators replicate data between servers. Replication is often part of a disaster-preparedness plan that keeps data available at all times.

Distributed File System Replication (DFSR) is one way of synchronizing the contents of two shares. It works together with DFS Namespaces, which let users reach a share through a path such as \\Domain\share rather than \\server\share, so the server behind that path can change without users noticing.

Together, DFSR and DFS Namespaces can point one share path at two or more servers. This also makes it easy to add another server to an existing migration configuration.

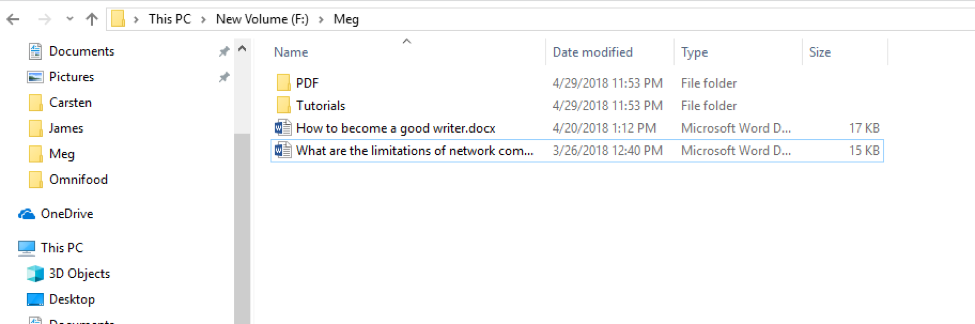
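On Windows Server 2012 R2 and later, the DFSR PowerShell module can set up a replication group between the old and new servers. The sketch below rests on assumptions: the function name New-MigrationReplication and all group, folder, server, and path values are hypothetical, and the DFS Replication role service must already be installed on both servers. Making the old server the primary member means its content is treated as authoritative during the initial sync.

```powershell
# Hypothetical sketch: replicate one folder from the old file server to the new one with DFSR.
function New-MigrationReplication {
    param(
        [Parameter(Mandatory)][string]$OldServer,
        [Parameter(Mandatory)][string]$NewServer,
        [Parameter(Mandatory)][string]$ContentPath,
        [string]$GroupName = 'FileMigration',
        [string]$FolderName = 'Shares'
    )
    # Create the replication group and the replicated folder, then add both servers as members.
    New-DfsReplicationGroup -GroupName $GroupName | Out-Null
    New-DfsReplicatedFolder -GroupName $GroupName -FolderName $FolderName | Out-Null
    Add-DfsrMember -GroupName $GroupName -ComputerName $OldServer, $NewServer | Out-Null
    # By default this creates a two-way connection between the servers.
    Add-DfsrConnection -GroupName $GroupName -SourceComputerName $OldServer -DestinationComputerName $NewServer | Out-Null
    # The old server is the primary member, so its content wins during initial replication.
    Set-DfsrMembership -GroupName $GroupName -FolderName $FolderName -ComputerName $OldServer -ContentPath $ContentPath -PrimaryMember $true -Force | Out-Null
    Set-DfsrMembership -GroupName $GroupName -FolderName $FolderName -ComputerName $NewServer -ContentPath $ContentPath -Force | Out-Null
}
```

For example, New-MigrationReplication -OldServer OLDFS01 -NewServer NEWFS01 -ContentPath D:\Shares, with placeholder names; the same content path is assumed on both servers.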

Shares and Permissions

Since Windows 2000, file shares are stored in the registry at:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\LanmanServer\Shares

Instead of recreating shares by hand, you can export this key to capture every share's path and share permissions, then import it on the new server. Because the exported shares point at the old server's paths, the drive letters on the new server must match those on the old server. To avoid any confusion, assign the same drive letters on both servers.
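The export and import can be scripted with reg.exe. A hedged sketch: the function names and the file path used in the example are hypothetical. Exporting the Shares key also exports its Security subkey, which holds the share permissions, and the Server service (LanmanServer) has to be restarted, or the server rebooted, before imported shares appear. Get-ShareDriveLetters lists the drive letters the shares depend on, so you can confirm the new server has matching volumes before importing.

```powershell
# Hypothetical helpers for moving share definitions through the registry with reg.exe.
function Export-ShareDefinitions {
    param([Parameter(Mandatory)][string]$FilePath)
    # Exports the Shares key and its subkeys; /y overwrites an existing file without prompting.
    reg.exe export 'HKLM\SYSTEM\CurrentControlSet\Services\LanmanServer\Shares' $FilePath /y
    if ($LASTEXITCODE -ne 0) { throw "reg export failed with exit code $LASTEXITCODE" }
}

function Import-ShareDefinitions {
    param([Parameter(Mandatory)][string]$FilePath)
    reg.exe import $FilePath
    if ($LASTEXITCODE -ne 0) { throw "reg import failed with exit code $LASTEXITCODE" }
    # Restart the Server service so it loads the imported shares; this drops open SMB sessions.
    Restart-Service -Name LanmanServer -Force
}

function Get-ShareRegistryEntries {
    # Reads every share value (a multi-string list of Name=Value entries) on the local server.
    $key = Get-Item -Path 'HKLM:\SYSTEM\CurrentControlSet\Services\LanmanServer\Shares'
    foreach ($name in $key.GetValueNames()) { $key.GetValue($name) }
}

function Get-ShareDriveLetters {
    # Extracts the unique, upper-cased drive letters from 'Path=X:\...' entries.
    param([string[]]$ShareEntries)
    $ShareEntries |
        ForEach-Object { if ($_ -match '^Path=([A-Za-z]):\\') { $Matches[1].ToUpper() } } |
        Sort-Object -Unique
}
```

On the old server, Get-ShareDriveLetters -ShareEntries (Get-ShareRegistryEntries) shows which drive letters the new server needs, and Export-ShareDefinitions -FilePath C:\Temp\shares.reg creates the file to copy across. On the new server, Import-ShareDefinitions -FilePath C:\Temp\shares.reg loads it.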

Conclusion

Choose whichever migration method is most convenient and comfortable for you. The right choice depends on your level of skill and on how much time you have to keep downtime for server operations to a minimum.